GPU621/Fighting Mongooses


Revision as of 23:06, 30 November 2016


Fighting Mongooses

Group Members

- Mike Doherty, Everything.

- Kyle Klerks, Everything.

Entry on: October 31st 2016

Group page (Fighting Mongooses) has been created. Suggested project topic under consideration:

- C++11 STL Comparison to TBB

Once the project topic is confirmed by Professor Chris Szalwinski, I will be able to proceed with topic research.

Entry on: November 30th 2016

TBB

TBB (Threading Building Blocks) is a high-level, general-purpose, feature-rich C++ template library that uses parametric polymorphism (templates) to implement parallelism with threads. It includes a variety of containers and algorithms that execute in parallel, and it has been designed to work without requiring any change to the compiler. It uses task parallelism; vectorization is not supported.
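Task parallelism means that work is expressed as tasks, which a scheduler then maps onto worker threads. The sketch below is not TBB code. It uses only the C++11 standard library (`std::async`) to illustrate the recursive split-into-tasks pattern that TBB algorithms such as `tbb::parallel_reduce` automate.

The function name `parallel_sum` and the default cutoff of 10,000 elements are illustrative assumptions. Unlike TBB's work-stealing scheduler, `std::launch::async` may start a new thread for each task rather than reusing a pool of workers.

```cpp
#include <cstddef>
#include <functional>
#include <future>
#include <numeric>
#include <vector>

// Hypothetical helper (not part of TBB): sums data[first, last) by splitting
// the range in half and running one half as a separate task. Ranges of at
// most `cutoff` elements (or fewer than 2) are summed serially, because a
// task for a tiny range costs more to spawn than it saves.
long long parallel_sum(const std::vector<int>& data,
                       std::size_t first, std::size_t last,
                       std::size_t cutoff = 10000) {
    if (last - first <= cutoff || last - first < 2) {
        return std::accumulate(data.begin() + first, data.begin() + last, 0LL);
    }
    std::size_t mid = first + (last - first) / 2;
    // Left half: a new task (std::launch::async runs it asynchronously).
    std::future<long long> left = std::async(std::launch::async, parallel_sum,
                                             std::cref(data), first, mid, cutoff);
    // Right half: computed on the current thread while the left task runs.
    long long right = parallel_sum(data, mid, last, cutoff);
    return left.get() + right;  // wait for the task and combine partial sums
}
```

For example, with `std::vector<int> v(100000, 1);`, the call `parallel_sum(v, 0, v.size())` returns 100000.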

BOOST

Boost provides free, peer-reviewed, portable C++ source libraries. Boost emphasizes libraries that work well with the C++ Standard Library. Boost libraries are intended to be widely useful and usable across a broad spectrum of applications. Ten Boost libraries are included in the C++ Standards Committee's Library Technical Report (TR1) and in the C++11 Standard. C++11 also includes several more Boost libraries in addition to those from TR1. More Boost libraries are proposed for standardization in C++17.

Since 2006, an intimate, week-long annual conference related to Boost, called C++ Now, has been held in Aspen, Colorado, each May. Boost has been a participant in the annual Google Summer of Code since 2007.

STD(PPL) – since Visual Studio 2015

Visual C++ provides the following technologies to help you create multi-threaded and parallel programs that take advantage of multiple cores and use the GPU for general purpose programming:

Auto-Parallelization and Auto-Vectorization - Compiler optimizations that speed up code.

Concurrency Runtime - Classes that simplify the writing of programs that use data parallelism or task parallelism.

C++ AMP (C++ Accelerated Massive Parallelism) - Classes that enable the use of modern graphics processors for general purpose programming.

Multithreading Support for Older Code (Visual C++) - Older technologies that may be useful in older applications. For new apps, use the Concurrency Runtime or C++ AMP.

Auto-Parallelizer

The /Qpar compiler switch enables automatic parallelization of loops in your code. When you specify this flag without changing your existing code, the compiler evaluates the code to find loops that might benefit from parallelization. The compiler is conservative in selecting the loops it parallelizes. Some loops do too little work to benefit. In addition, every unnecessary parallelization can spawn a thread pool, add synchronization, or incur other overhead that slows performance instead of improving it. Multiple example loops are available [here](https://msdn.microsoft.com/en-ca/library/hh872235.aspx).
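Below is a minimal sketch of the kind of loop the auto-parallelizer looks for. Every iteration is independent, so the iterations can safely run on different threads.

The `#pragma loop(hint_parallel(0))` line is an MSVC-specific hint that asks the compiler, when /Qpar is enabled, to parallelize the loop; `0` lets the runtime choose the thread count. Other compilers generally ignore it, possibly with an unknown-pragma warning. The function name `add_arrays` is hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical example: a[i] = b[i] + c[i] for every element.
// Assumes a, b and c all have the same size. No iteration reads a value
// written by another iteration, so the loop is a candidate for /Qpar.
void add_arrays(std::vector<float>& a,
                const std::vector<float>& b,
                const std::vector<float>& c) {
    const int n = static_cast<int>(a.size());
    // MSVC-only hint (honoured with /Qpar); other compilers skip it.
#pragma loop(hint_parallel(0))
    for (int i = 0; i < n; ++i) {
        a[i] = b[i] + c[i];
    }
}
```

Building with something like `cl /O2 /Qpar /Qpar-report:2 example.cpp` should make MSVC report which loops it parallelized and, for any loops it skipped, a reason code.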

Concurrency Runtime

The Concurrency Runtime for C++ helps you write robust, scalable, and responsive parallel applications. It raises the level of abstraction so that you do not have to manage the infrastructure details that are related to concurrency. You can also use it to specify scheduling policies that meet the quality-of-service demands of your applications.
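To make the "infrastructure details" concrete, the sketch below hand-writes roughly what a call such as `concurrency::parallel_for(first, last, func)` from the Concurrency Runtime's Parallel Patterns Library (PPL) handles for you:

- choosing a thread count
- partitioning the range
- starting the threads
- joining them

It uses only `std::thread` so that it builds outside Visual C++. The name `manual_parallel_for` is hypothetical. A real runtime also reuses threads and balances load between them, which this sketch does not attempt.

```cpp
#include <algorithm>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical helper: calls body(i) for every i in [first, last),
// giving each hardware thread one contiguous chunk of the range.
// body must be safe to call concurrently for different values of i.
template <typename Func>
void manual_parallel_for(std::size_t first, std::size_t last, Func body) {
    if (first >= last) return;
    std::size_t count = last - first;
    // hardware_concurrency() may return 0 when the value is unknown.
    std::size_t workers = std::max<std::size_t>(1, std::thread::hardware_concurrency());
    workers = std::min(workers, count);
    std::size_t chunk = (count + workers - 1) / workers;  // ceiling division

    std::vector<std::thread> threads;
    for (std::size_t begin = first; begin < last; begin += chunk) {
        std::size_t end = std::min(begin + chunk, last);
        threads.emplace_back([begin, end, &body] {
            for (std::size_t i = begin; i < end; ++i) body(i);
        });
    }
    for (auto& t : threads) t.join();  // wait for every chunk to finish
}
```

With PPL, the same work collapses to a single `concurrency::parallel_for` call from `<ppl.h>`, and the runtime decides how to split and schedule it.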

C++ AMP (C++ Accelerated Massive Parallelism)

C++ AMP accelerates the execution of your C++ code by taking advantage of the data-parallel hardware that's commonly present as a graphics processing unit (GPU) on a discrete graphics card. The C++ AMP programming model includes support for multidimensional arrays, indexing, memory transfer, and tiling. It also includes a mathematical function library. You can use C++ AMP language extensions to control how data is moved from the CPU to the GPU and back.

AMP Tiling

Tiling divides threads into equal rectangular subsets, or tiles. If you use an appropriate tile size and tiled algorithm, you can get even more acceleration from your C++ AMP code. The basic components of tiling are:

- tile_static variables. Access to data in tile_static memory can be significantly faster than access to data in the global space (array or array_view objects).
- tile_barrier::wait Method. A call to tile_barrier::wait suspends execution of the current thread until all of the threads in the same tile reach the call to tile_barrier::wait.
- Local and global indexing. You have access to the index of the thread relative to the entire array_view or array object and the index relative to the tile.
- tiled_extent Class and tiled_index Class. You use a tiled_extent object instead of an extent object in the parallel_for_each call. You use a tiled_index object instead of an index object in the parallel_for_each call.
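C++ AMP only builds with Visual C++, so the sketch below is a CPU analogue of the tiling idea rather than AMP code. It is a tiled matrix multiply in standard C++. For each output tile, the matching tiles of the inputs are first copied into small local arrays, which stand in for tile_static memory. Every product for that tile is then computed from those local copies.

In real AMP code, the threads of a tile would load the tile cooperatively and call tile_barrier::wait before using it. Here, a single thread processes each tile, so no barrier is needed. The tile size of 16 and the name `tiled_matmul` are illustrative assumptions.

```cpp
#include <cstddef>
#include <vector>

constexpr std::size_t TILE = 16;  // assumed tile size (cf. a 16 x 16 tiled_extent)

// Hypothetical CPU analogue of a tiled matrix multiply: C = A * B for
// n x n row-major matrices. Tiles of A and B are copied into local arrays
// (the stand-in for tile_static memory) and reused for a whole tile of C.
std::vector<float> tiled_matmul(const std::vector<float>& A,
                                const std::vector<float>& B, std::size_t n) {
    std::vector<float> C(n * n, 0.0f);
    float a_tile[TILE][TILE];
    float b_tile[TILE][TILE];
    for (std::size_t bi = 0; bi < n; bi += TILE) {          // tile row of C
        for (std::size_t bj = 0; bj < n; bj += TILE) {      // tile column of C
            for (std::size_t bk = 0; bk < n; bk += TILE) {  // step along the shared dimension
                // Load one tile of A and one tile of B, zero-padding past the edges.
                for (std::size_t r = 0; r < TILE; ++r) {
                    for (std::size_t c = 0; c < TILE; ++c) {
                        std::size_t a_row = bi + r, a_col = bk + c;
                        std::size_t b_row = bk + r, b_col = bj + c;
                        a_tile[r][c] = (a_row < n && a_col < n) ? A[a_row * n + a_col] : 0.0f;
                        b_tile[r][c] = (b_row < n && b_col < n) ? B[b_row * n + b_col] : 0.0f;
                    }
                }
                // Accumulate partial dot products using only the local tiles.
                for (std::size_t r = 0; r < TILE && bi + r < n; ++r) {
                    for (std::size_t c = 0; c < TILE && bj + c < n; ++c) {
                        float sum = 0.0f;
                        for (std::size_t t = 0; t < TILE; ++t)
                            sum += a_tile[r][t] * b_tile[t][c];
                        C[(bi + r) * n + (bj + c)] += sum;
                    }
                }
            }
        }
    }
    return C;
}
```

The payoff of the design is reuse. Each element loaded into a tile is read TILE times from fast local storage instead of repeatedly from the full matrices, which is the same reason tile_static memory helps on a GPU.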

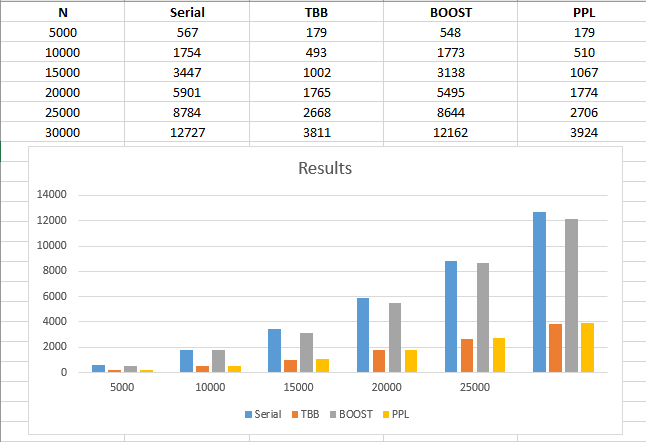

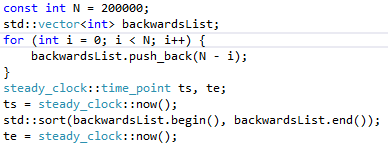

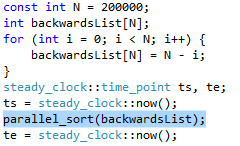

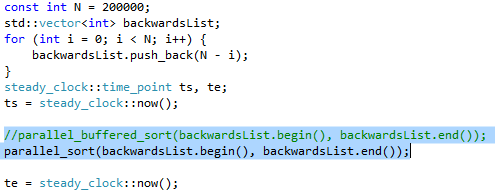

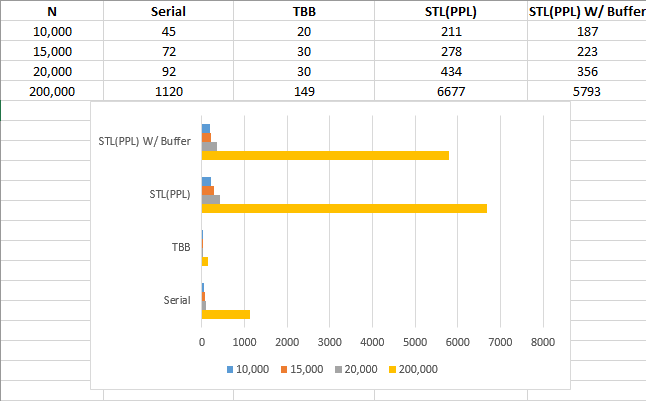

Comparing STL/PPL to TBB: Sorting Algorithm

Let’s compare a simple sorting algorithm between TBB and STL/PPL.

Serial
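The original serial listing is not reproduced in this revision of the page. The sketch below shows what a serial baseline could look like, assuming the benchmark fills a std::vector with pseudo-random integers and times std::sort with std::chrono. The element count, the seed, the value range, and the name `time_serial_sort` are illustrative assumptions, not the settings behind this page's results.

```cpp
#include <algorithm>
#include <chrono>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical benchmark helper: fills `out` with `count` pseudo-random
// values, sorts it serially with std::sort and returns the elapsed time in
// milliseconds. Only the sort itself is timed, not the data generation.
double time_serial_sort(std::size_t count, std::vector<int>& out,
                        unsigned seed = 42) {
    std::mt19937 gen(seed);  // fixed seed so every run sorts the same data
    std::uniform_int_distribution<int> dist(0, 1000000);
    out.resize(count);
    for (auto& x : out) x = dist(gen);

    auto start = std::chrono::steady_clock::now();
    std::sort(out.begin(), out.end());  // serial sort over random-access iterators
    auto stop = std::chrono::steady_clock::now();
    return std::chrono::duration<double, std::milli>(stop - start).count();
}
```

For the parallel variants, the timed call would be swapped for one of these:

- `tbb::parallel_sort(out.begin(), out.end())` (TBB)
- `std::sort(std::execution::par, out.begin(), out.end())` (C++17 parallel algorithms)

All other code stays the same, so the comparison measures only the sort.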

TBB

STL

Results

The clear difference in the code is the data each version sorts. The TBB version sorts a plain array through raw pointers, while the STL solutions (serial and parallel) sort a vector through its iterators. Both are random-access iterators, which std::sort and tbb::parallel_sort each require. If the TBB sort is run on a vector instead of a plain array, the times are more even.

Conclusion

The conclusion to draw when comparing TBB to STL, in their current states, is that you should ideally use TBB. STL parallelism is still very experimental and unrefined, and will likely remain that way until the release of C++17. However, once C++17 is released, the native parallel library solution will likely be the ideal road to follow.