OPS345 Assignment 1

Revision as of 01:55, 26 January 2022

Overview

In this assignment you'll use many of the skills you learned so far to set up several Apache web servers with a lame load balancer. It won't be even close to production-ready but you will get more practice with the basics, which is what you need most now.

This assignment assumes that your www.youruserid.ops345.ca is a working web server. If you didn't complete that part of Lab 3, you'll need to do it first.

The format of the assignment is similar to a lab, but it's less specific about the exact steps you need to take. You're expected to show more independent learning abilities for an assignment than for a lab. The extra complicated parts are clarified for you here.

In short, a complete assignment will show that you can:

- Create AMIs from an existing VM and deploy new VMs based on that AMI.

- Use SSH keys, rsync, and cron to keep data on multiple servers synchronized.

- Use iptables as an Apache load balancer by directing traffic to a random slave.

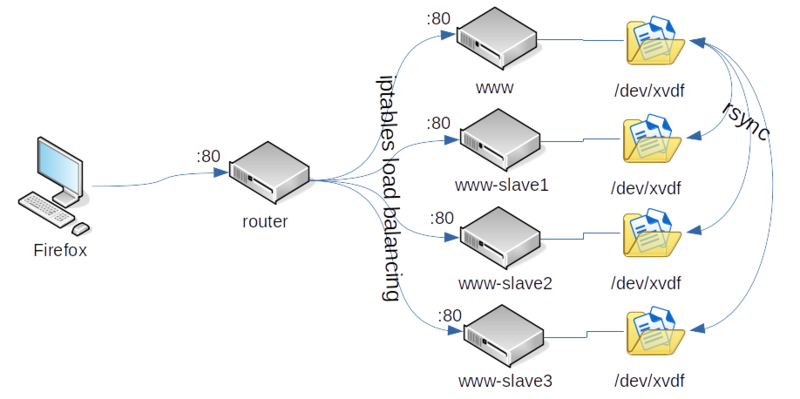

This is the overview of your completed work in the form of a diagram:

Part 1: first slave

- Go to your www VM in the AWS Console and find the button to create an image from it.

- Name the image www-for-asg1-p1

- This will create an AMI with all the software configured the way you configured it.

- Deploy one new VM from the AMI you created above.

- Name it www-slave1

- With primary IP address 10.3.45.21

- In ops345wwsg

- Make sure the second virtual drive is named www-data-slave1

- Add the appropriate iptables rule on router (don't forget to save the iptables rules) and the matching ops345routersg rule to allow yourself to SSH to www-slave1 via port 2221.

- Don't change the hostname of www-slave1; leave it as "www".

Sync files with www

Each of your web servers (www and all the slaves) needs to have the same data on it. That means you need to synchronize the contents of /var/www/html. You might recall this is mounted from a separate drive (/dev/xvdf) but that doesn't matter for this assignment.

You'll use rsync to do the synchronization, but first you need to set up your user on www-slave1 to be able to ssh to www without a password.

- Create an ssh key on www-slave1 as your regular user. Make sure the key is stored in /home/yourusername/.ssh/id_rsa_wwwsync

- On www edit /home/yourusername/.ssh/authorized_keys

- Paste the contents of /home/yourusername/.ssh/id_rsa_wwwsync.pub from www-slave1 to the end of that file as one line.

- Test your key authentication setup as yourusername on www-slave1 to confirm you can log in to yourusername@10.3.45.11 (www) without a password:

ssh -i /home/yourusername/.ssh/id_rsa_wwwsync yourusername@10.3.45.11

Now set up rsync:

- Create a new file in /var/www/html on www and use this command on www-slave1 to make sure that new file is copied to www-slave1:

rsync -e "ssh -i ~/.ssh/id_rsa_wwwsync" -au --exclude="nextcloud" yourusername@10.3.45.11:/var/www/html/* /var/www/html

- Create a new file in /var/www/html on www-slave1 and use this command on www-slave1 to make sure that new file is copied to www:

rsync -e "ssh -i ~/.ssh/id_rsa_wwwsync" -au --exclude="nextcloud" /var/www/html/* yourusername@10.3.45.11:/var/www/html

- Once you confirm both rsync commands above work: make them run automatically every 5 minutes by editing your user's crontab on www-slave1:

*/5 * * * * rsync -e "ssh -i ~/.ssh/id_rsa_wwwsync" -au --exclude="nextcloud" yourusername@10.3.45.11:/var/www/html/* /var/www/html

*/5 * * * * rsync -e "ssh -i ~/.ssh/id_rsa_wwwsync" -au --exclude="nextcloud" /var/www/html/* yourusername@10.3.45.11:/var/www/html

- Test that by creating some files on www, some other files on www-slave1, and waiting more than 5 minutes.
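To get a feel for what the two cron lines do together, here is a toy model in Python. It is only a sketch: the dictionaries stand in for /var/www/html on each server, the timestamps are made-up integers, and `sync` is a hypothetical helper that imitates the "copy if missing or newer, never delete" behaviour of rsync -au. It does not run rsync.

```python
def sync(src, dst):
    """Model of `rsync -au src dst`: copy a file when dst lacks it or
    holds an older copy. Nothing is ever deleted from dst."""
    for name, (mtime, data) in src.items():
        if name not in dst or dst[name][0] < mtime:
            dst[name] = (mtime, data)


def two_way_sync(www, slave):
    """Model of one cron cycle on www-slave1."""
    sync(www, slave)   # first cron line: pull from www
    sync(slave, www)   # second cron line: push to www


# Both servers start with the same content: name -> (mtime, data)
www = {"index.php": (1, "v1")}
slave = {"index.php": (1, "v1")}

# A new file on each side shows up on both after one cycle.
www["a.html"] = (2, "made on www")
slave["b.html"] = (3, "made on slave")
two_way_sync(www, slave)
print(sorted(www) == sorted(slave))   # True

# Deleting a file on www does not stick: the slave still has its copy
# and pushes it back on the next cycle.
del www["a.html"]
two_way_sync(www, slave)
print("a.html" in www)                # True
```

The second result is worth remembering when you test: a file you delete on one server will come back as long as any other server still has it.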

Part 2: iptables load balancing

You already have HTTP (port 80) traffic forwarded from router to www. That means you've already done most of the work to set up iptables to do the load balancing.

- Confirm that you will see your website by going to your router's public IP with a web browser. If it doesn't work: go back to lab 3 and figure out why.

- When you're sure it works: save a backup copy of your iptables rules just in case:

cp /etc/sysconfig/iptables /root/iptables-before-asg1

You can restore the working set of rules if you make a big mess, but try not to: you may lock yourself out of router altogether, and then you won't be able to restore the original rules either.

- Remove the existing port 80 rule from your nat table. Find the rule number with:

iptables -L -n -t nat --line-numbers

- Add two new rules to send 50% of the incoming requests for port 80 to www, and the rest to www-slave1:

iptables -t nat -A PREROUTING -p tcp -m tcp --dport 80 -m statistic --mode random --probability 0.5 -j DNAT --to-destination 10.3.45.11:80

iptables -t nat -A PREROUTING -p tcp -m tcp --dport 80 -j DNAT --to-destination 10.3.45.21:80

The two rules above are based on Yann Klis's blog post. You should read that so you understand how they work.
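The key idea is that the rules are checked in order, so a connection only reaches the second rule if the first one did not match it. The last rule has no statistic match, which makes it behave like a probability of 1. The short Python sketch below works out what share of all requests each rule ends up handling. The function name `effective_shares` is made up for this illustration and is not part of iptables.

```python
def effective_shares(probabilities):
    """Given the --probability of each rule in order, return the fraction
    of all requests that each rule ends up handling."""
    shares = []
    remaining = 1.0  # fraction of requests not yet matched by an earlier rule
    for p in probabilities:
        shares.append(remaining * p)
        remaining *= (1.0 - p)
    return shares


# The two rules above: 0.5 for www, then a catch-all for www-slave1.
shares = effective_shares([0.5, 1.0])
print(shares)  # [0.5, 0.5]
```

You can pass in any other list of probabilities to check a rule set on paper before you risk it on router.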

- Test that your load balancer works by looking at the logs on both web servers and reloading your webpage in Firefox. After about 8 requests from Firefox the new requests will be directed to the other server:

tail -f /var/log/httpd/access_log

- You can also see the private IP address on your web page change: that's the actual IP address of the server processing the request, not the IP address of the load balancer.

Part 3: two more slaves

Once you're happy with all your work above: you are ready to create two more slaves and distribute the web server load across all four of your web servers.

- Create another AMI, this time from www-slave1 instead of www. Name it www-for-asg1-p3

- Deploy www-slave2 with IP address 10.3.45.22 and www-slave3 with IP address 10.3.45.23 from your new image.

- Do the work you need to allow SSH access to those two VMs. You won't need to change anything on them, they will be identical to www-slave1, but SSH access will help you make sure your work is done properly.

- Modify the iptables rules on your load balancer to make sure the load is distributed equally among all four web servers.

Part 4: load test

Refreshing your webpage in Firefox over and over again is not the best way to test your load balancer. You'll set up a Python script to do it instead. I wrote most of it for you:

    #!/usr/bin/env python3
    # asg1Test.py
    # Test for OPS345 Assignment 1
    # Author: Andrew Smith
    # Student changes by: Your Name Here

    import os
    import re

    numRunsLeft = 10
    numMain = 0
    numSlave1 = 0

    while numRunsLeft > 0:
        # Fetch the page through the load balancer
        output = os.popen("curl --no-progress-meter http://3.210.171.214/")
        curlOutput = output.read()
        # Find the private IP address of the server that answered
        ip = re.search(r'10\.3\.45\...', curlOutput)
        if ip[0] == '10.3.45.11':
            numMain = numMain + 1
        elif ip[0] == '10.3.45.21':
            numSlave1 = numSlave1 + 1
        numRunsLeft = numRunsLeft - 1

    print('Hits on main www server: ' + str(numMain))
    print('Hits on www-slave1 server: ' + str(numSlave1))

All that's left for you to do is:

- Put your name in the comments at the top.

- Fix the part that's supposed to connect to your server instead of mine.

- Add to the script so that it counts slave2 and slave3 as well, and prints the results at the end.

- Once you're happy with the above: make it run for 60 seconds instead of 10 and record the results. The number of requests should be split almost perfectly between your four web servers.
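One thing to watch for while you extend the script: if a request fails or the page comes back without an IP address in it, re.search returns None and the script crashes on ip[0]. The sketch below shows one possible way to handle that. The helper name `extract_backend_ip` and the sample page text are made up for this example, so adapt them to whatever your page actually prints.

```python
import re

# Matches any private address in the 10.3.45.x range used in this assignment.
BACKEND_IP = re.compile(r"10\.3\.45\.\d{1,3}")


def extract_backend_ip(page):
    """Return the first 10.3.45.x address found in the page, or None."""
    match = BACKEND_IP.search(page)
    return match.group(0) if match else None


# Hypothetical page contents, for illustration only.
print(extract_backend_ip("<p>Server address: 10.3.45.21</p>"))  # 10.3.45.21
print(extract_backend_ip("<h1>503 Service Unavailable</h1>"))   # None
```

Checking for None before counting a hit also gives you a way to count failed requests separately.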

Submission

Why this assignment is stupid

You're likely not ready for real load balancing on AWS; this assignment is the most I figured is reasonable to ask an average student to do. So this assignment has some significant problems:

- Your website will be down for 25% of the requests for each server that's offline, since your load balancer doesn't know whether the slaves are alive or not.

- The storage is only synced, in the worst case, every 5 minutes (10 if the change is on a slave). That's unacceptably slow for current web applications.

- The two-way rsync syncing you set up won't synchronize file deletions.

- This sort of load balancing won't work with Nextcloud or most other non-trivial web applications that use cookies.
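The first problem in the list is easy to check with a quick simulation. This is only a sketch: it assumes the balancer picks one of the four servers with equal probability and never checks whether the server is alive, which is how the iptables setup in this assignment behaves. The function name `failed_fraction` and the fixed random seed are choices made for this example.

```python
import random


def failed_fraction(servers, offline, requests=100_000, seed=345):
    """Simulate a balancer that picks a server at random without any
    health check, and return the fraction of requests that hit a dead one."""
    rng = random.Random(seed)
    failures = sum(1 for _ in range(requests) if rng.choice(servers) in offline)
    return failures / requests


servers = ["10.3.45.11", "10.3.45.21", "10.3.45.22", "10.3.45.23"]

# One slave offline: roughly a quarter of the requests fail.
print(failed_fraction(servers, {"10.3.45.22"}))

# Two slaves offline: roughly half of the requests fail.
print(failed_fraction(servers, {"10.3.45.22", "10.3.45.23"}))
```

A real load balancer avoids this by running health checks and taking dead servers out of the rotation.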

We might fix some of these problems later in OPS345.