DPS921/ASCII

(→OpenCV) |

(→Group : NoName) |

||

| (45 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| − | = | + | =Group : Pixels2= |

===Team Members=== | ===Team Members=== | ||

# [mailto:kyuriy@myseneca.ca?subject=DPS921 Alex] | # [mailto:kyuriy@myseneca.ca?subject=DPS921 Alex] | ||

| Line 5: | Line 5: | ||

# [mailto:kyuriy@myseneca.ca?subject=GPU621 Yuriy Kartuzov] | # [mailto:kyuriy@myseneca.ca?subject=GPU621 Yuriy Kartuzov] | ||

| − | === | + | |

| + | ===Presentation=== | ||

Google Slides presentation can be found | Google Slides presentation can be found | ||

[https://docs.google.com/presentation/d/1Py6n332lNK9oVpiJ1-IGasvRQA5EXM52pXsKsKi4cBs/edit?usp=sharing here] | [https://docs.google.com/presentation/d/1Py6n332lNK9oVpiJ1-IGasvRQA5EXM52pXsKsKi4cBs/edit?usp=sharing here] | ||

| − | ==ASCII Art | + | |

| + | ==ASCII Art== | ||

===Introduction=== | ===Introduction=== | ||

The idea is take an image and turn it into a pictorial representation using ascii character. We use PNG as input and TXT file as output. The idea with TXT format is that they can be pasted into editors, their font can be modified, text colour and background changed for a customized look. | The idea is take an image and turn it into a pictorial representation using ascii character. We use PNG as input and TXT file as output. The idea with TXT format is that they can be pasted into editors, their font can be modified, text colour and background changed for a customized look. | ||

| Line 15: | Line 17: | ||

We decided to take it a step further and output a PNG file as well. This loses some of the functionality mentioned above, however now we are able to process videos since we can take a frame and run our algorithm through it. Having a video will also highlight how efficient our algorithm can run and whether we can keep up with live processing. | We decided to take it a step further and output a PNG file as well. This loses some of the functionality mentioned above, however now we are able to process videos since we can take a frame and run our algorithm through it. Having a video will also highlight how efficient our algorithm can run and whether we can keep up with live processing. | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | === | + | ===<span style="color:#29bc61">OUTPUT Samples</span>=== |

| − | [[File: | + | #[https://drive.google.com/open?id=1XdiQNKSioRO4BhRdWrnoORsk-OKVm_Yb Live video stream (MP4)] |

| − | + | #[https://drive.google.com/open?id=1TNntn00V9z6jSreNNxXtbVBMUgru0TD5 Video file processing (MKV)] | |

| + | #[https://drive.google.com/open?id=1RyMGe1UBu03sR5hCYzS6r4Gk_GEQlDLn Reverse sampling with white colour font and black colour background (PNG)] | ||

| + | #[https://drive.google.com/open?id=16L94F9Za4bmryrSS7cEV_oWbfp3vl4vB R2D2 above (TXT)] | ||

| + | '''Note:''' for best video quality download the files for view since Google compresses them further for playback in browsers. For a text file you'll need to decrease font such that no lines wrap unto to the next line and don't forget to use '''''monospace''''' font. | ||

| + | |||

| + | ===Working with image and video files=== | ||

| + | [[File:Opencv.jpg|100px]] | ||

| + | is an open source computer vision library which can do a lot of cool stuff. As beginners we use it for: | ||

*Read and write '''image''' files | *Read and write '''image''' files | ||

*Read and write '''video''' files | *Read and write '''video''' files | ||

*Read from video stream like '''camera''' | *Read from video stream like '''camera''' | ||

| − | * | + | *Display video in native '''OS window''' |

| + | |||

| + | OpenCV give us a native Matrix object that represents a frame in a video or live feed. We can easily extract an array of unsigned chars from it. In C++ unsigned char hold an integer value from 0 - 255. This number represents the luminosity of a colour or grayscale channel. 0 being complete black and 255 pure white. This is also the same range we use for front-end work with CSS attribute:function ( '''background-color: rgb(0, 191, 255)''' ). For the test below we use RGB or Red Green and Blue channels to calculate luminosity ourselves for more areas to optimize. However, for simplicity in explaining Algorthim in the next section, we are going to assume that OpenCV already gives us a greyscale frame, as it has ability to do so. | ||

| + | |||

| + | |||

| + | This means that for a standard HD frame 1920 pixels by 1080 pixels we are given an array with 2,073,600 elements( unsigned char * data[2,073,600];) If we are processing a live feed at 30 frames per second we have a time constraint to process 62,208,000 elements (30 * 2,073,600) per second. From a perspective of a single frame, 1/30 that means have to process a frame under 33 milliseconds so as not to drop any frames. | ||

| + | |||

| + | ===Algorithm and Pseudocode=== | ||

| + | |||

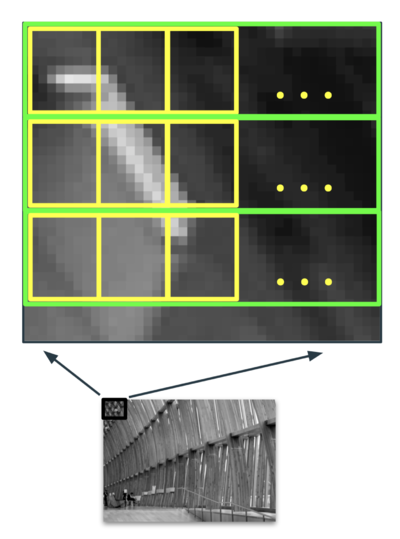

| + | The implementation idea of ASCII art, is to break up an image into chunks represented by yellow boxes in the picture below. This means we have to iterate chunks in a line and then we iterate on lines of chunks - green boxes. This will form two outer-most loops in our code. | ||

| + | |||

| + | [[File:zoomed.png|400px]] | ||

| + | |||

| + | Then we think of processing a chunk itself. We need to average the value of all pixels within it to a single value. This requires us to iterate over each pixel in a chunk. Added complexity in indexing the pixels arises from the fact that lines of pixels are not contiguous in memory. This iteration forms the 2 inner-most loops in our algorithm. | ||

| + | |||

| + | [[File: chunk.png|150px]] | ||

| + | |||

| + | Once we have a single value we need to map its luminosity to a character we are going to replace it with. The character templates are small images we have in our project folder (Pound.png, AT.png W.png etc.) These are small images 7 by 11 pixels only, and we read all images into 2D array. The dimensions of our character template determines the size of the chunk. We have experimented with different size templates and found that smaller font to be preferable. Once we know which character we want to print we will copy, pixel by pixel from our template into output array of unassigned chars of the same length as our original frame. | ||

| + | |||

| + | [[File:charTmpl.png|150px]] | ||

| + | |||

| + | Iterating over character template forms the second pair of inner-most loops. The pseudocode to do this is below. And while it may look like we have 4 nested loops the runtime complexity of our algorithm is O(N) since we are iterating over each pixel in an image exactly once. Because we are able to break down work into chunks, their processing can be done independently of each other. This problem can be classified as '''Embarrassingly parallel''' problem, however I prefer a new proposed term a '''perfectly parallel''' problem. (Source: [https://en.wikipedia.org/wiki/Embarrassingly_parallel]) | ||

| + | |||

| + | [[File:codePxl.png|300px]] | ||

| + | |||

| + | ==vTune Amplifier with OpenMP== | ||

| + | |||

| + | '''vTune Amplifier Overview:''' | ||

| + | |||

| + | We used vTune Amplifier to profile our code to check it's initial performance. | ||

| + | |||

| + | vTune Amplifier is a code profiler that can identify: | ||

| + | * Where time is being spent during the code’s execution | ||

| + | * Amount of concurrency in the code | ||

| + | * Bottlenecks created by synchronous primitives | ||

| + | |||

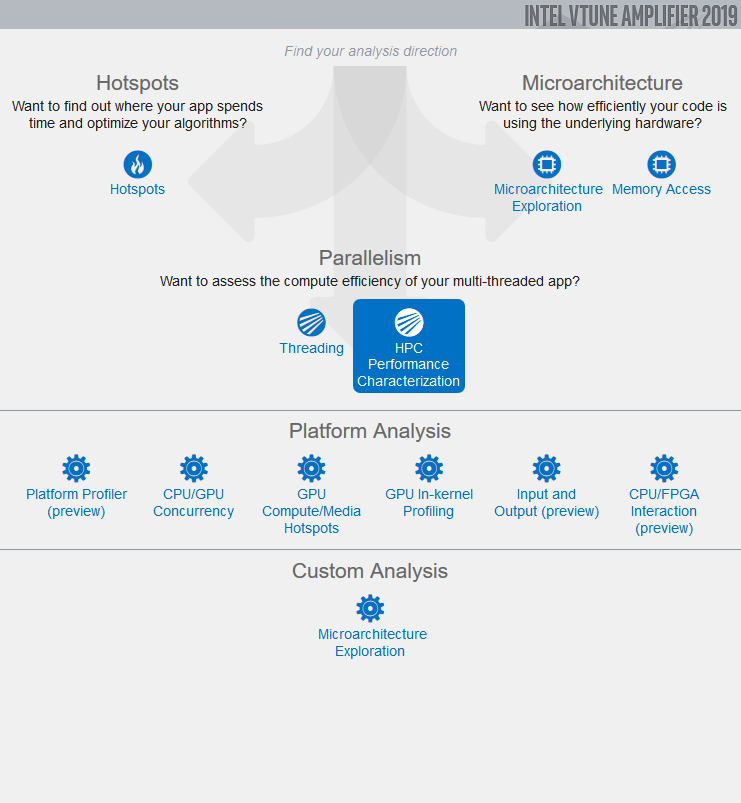

| + | vTune Amplifier has multiple analysis it can perform but we analyzed the ASCII art program with the '''Hotspot Analysis''' and '''HPC Performance Characterization'''. | ||

| + | |||

| + | To preface the following analysis, this was done using a 30 second video at 1080p. | ||

| + | |||

| + | [[File: Vt_types.png]] | ||

| + | |||

| + | The initial hotspot analysis of our serial code took '''44.904 seconds''' to complete with the '''imageToTextScaledNative''' function as the top hotspot. | ||

| + | [[File:Ascii_art_serial_hotspot.png]] | ||

| + | |||

| + | Looking at the thread visualization pane we can see that only one thread is used by the serial implementation of the function. | ||

| + | [[File:Ascii_art_serial_threads.png]] | ||

| + | |||

| + | To fix this we added the statement: | ||

| + | |||

| + | #pragma omp parallel for | ||

| + | |||

| + | to our code. So referring to our sudocode, the new code looked something like this: | ||

| + | |||

| + | #pragma omp parallel for | ||

| + | for( j ) { | ||

| + | for( k ) { | ||

| + | int sum=0 | ||

| + | for(y) { | ||

| + | for(x) { | ||

| + | int index // using j, k x, y | ||

| + | sum += input[index]; | ||

| + | } | ||

| + | } | ||

| + | int ave // get average using sum | ||

| + | for(y) { | ||

| + | for(x) { | ||

| + | int index // using j, k x, y | ||

| + | int charIndex // using x, y | ||

| + | output[index] = ascii[charIndex] | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | |||

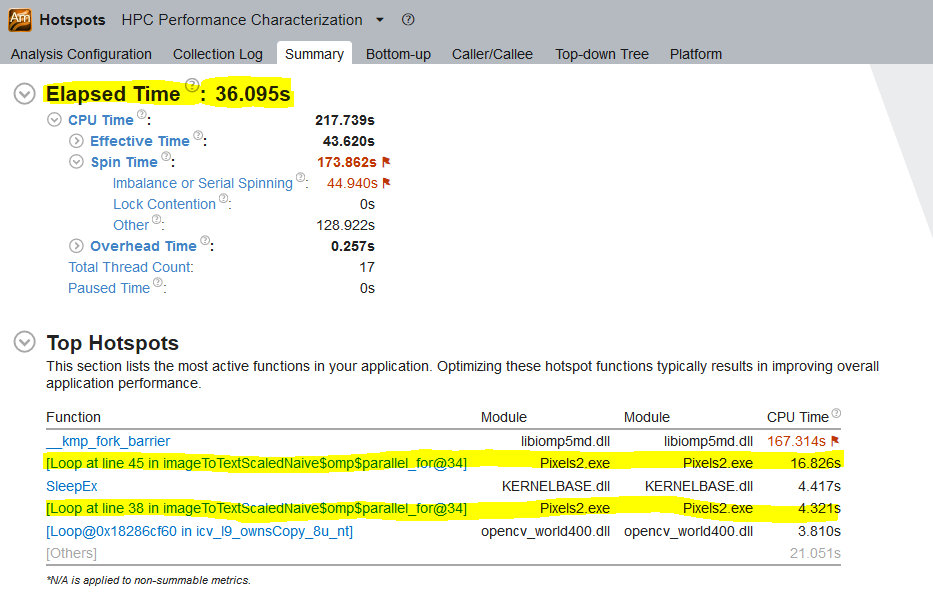

| + | This improved our overall runtime and reduced it to '''36.095 seconds'''. | ||

| + | |||

| + | [[File:Ascii_art_parallel_hotspot.png]] | ||

| + | |||

| + | Note that our overall CPU time has increased. This is a good sign as that means that we are utilizing our CPU more. | ||

| + | |||

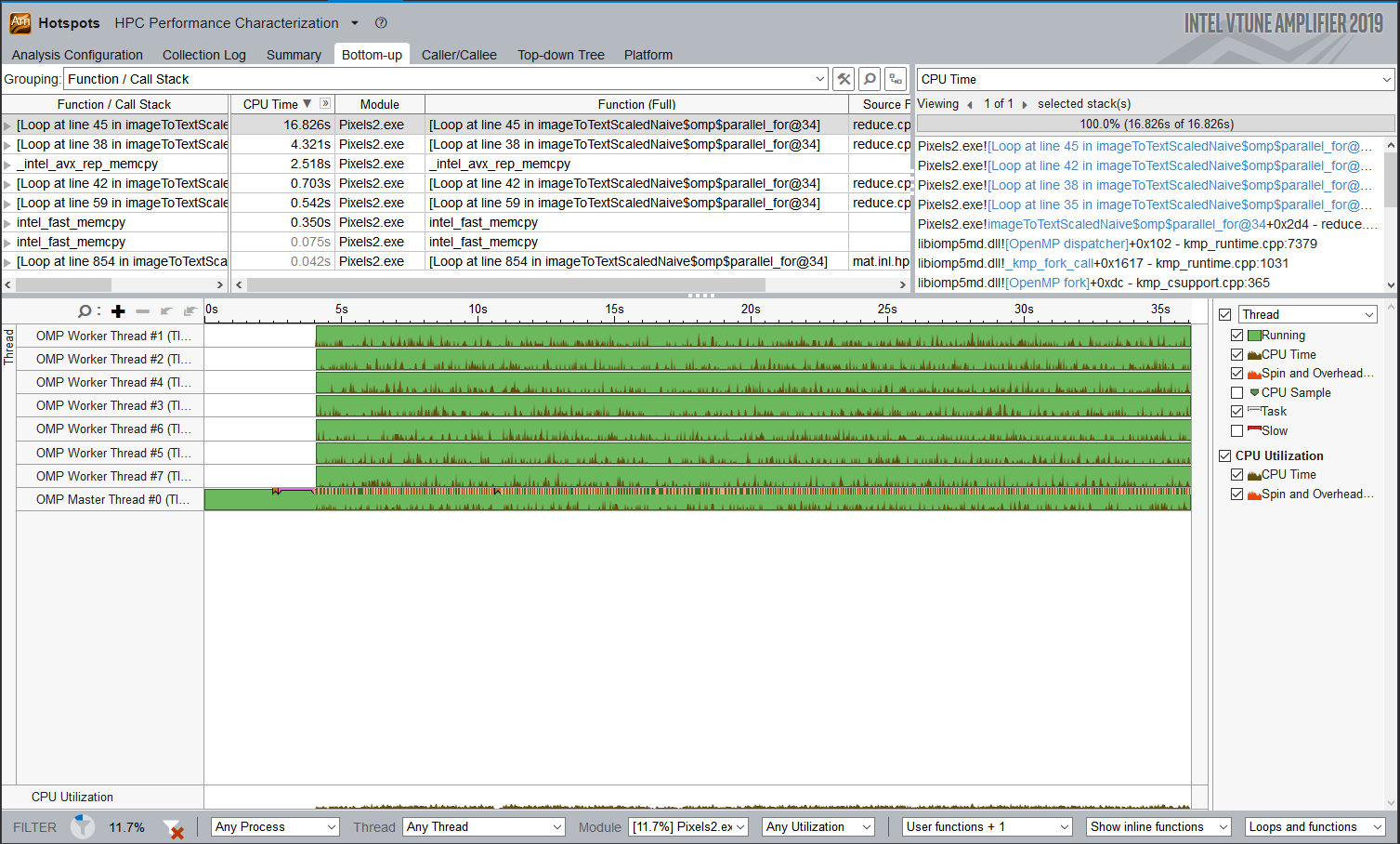

| + | Looking at our thread usage we see that we are using 8 threads instead of 1 and the presence of the '''omp$parallel''' attached to our function. | ||

| + | |||

| + | [[File:Ascii_art_parallel_threads.png]] | ||

| + | |||

| + | Note the pink section on the master thread. That section represents OpenCV initializing the window the resulting video is displayed on. This is outside the scope of our code so we assume that it is uncontrollable overhead. | ||

| + | |||

| + | The orange blocks show the parallel regions where we process and convert each frame and the gap between is the task where OpenCV grabs each individual frame from the source. | ||

| + | |||

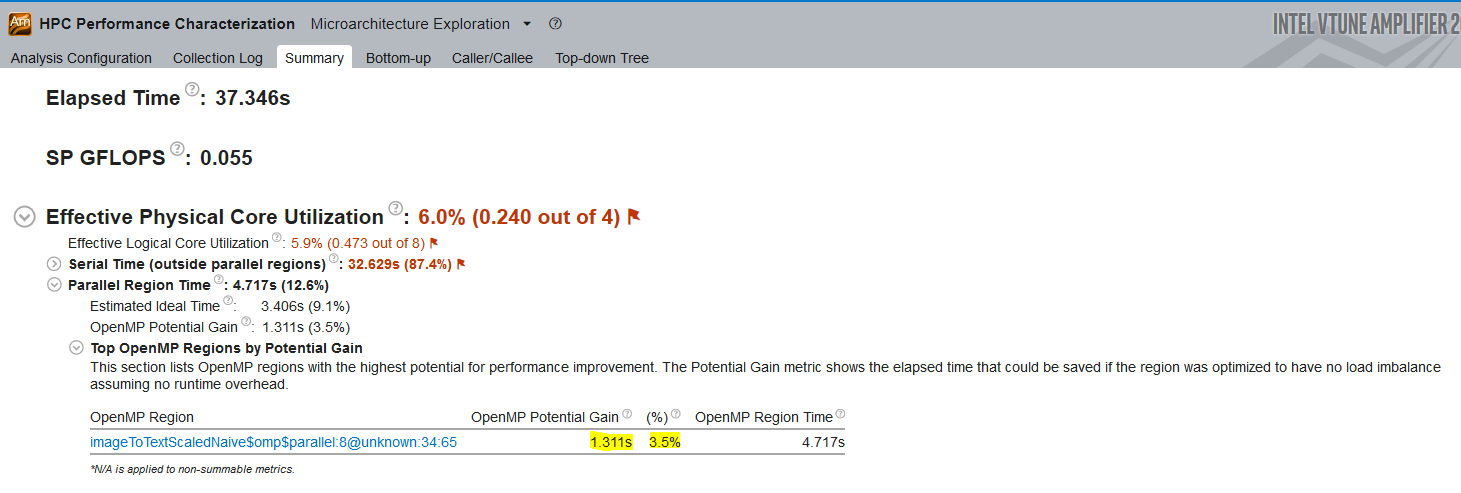

| + | Then we ran our code through the HPC Performance Characterization analysis to verify the efficiency of our OpenMP implementation. | ||

| + | |||

| + | [[File:Ascii_art_hpc_og.png]] | ||

| + | |||

| + | This analysis showed that we could potentially gain '''1.311''' seconds of runtime if we optimized OpenMP. This occurred because there is an imbalance in the work distribution of our threads which means one or more threads complete their task before the other threads. This means they are idle and wait at the barrier for the other threads to complete their work ultimately resulting in unused processing power. | ||

| + | |||

| + | To fix this we added dynamic scheduling to our code so the workload is dynamically allocated so when one thread finishes their work and there is more work to be done, the thread will pick up more work. | ||

| + | |||

| + | So our pragma statement was changed to: | ||

| + | |||

| + | #pragma omp parallel for schedule(dynamic) | ||

| + | |||

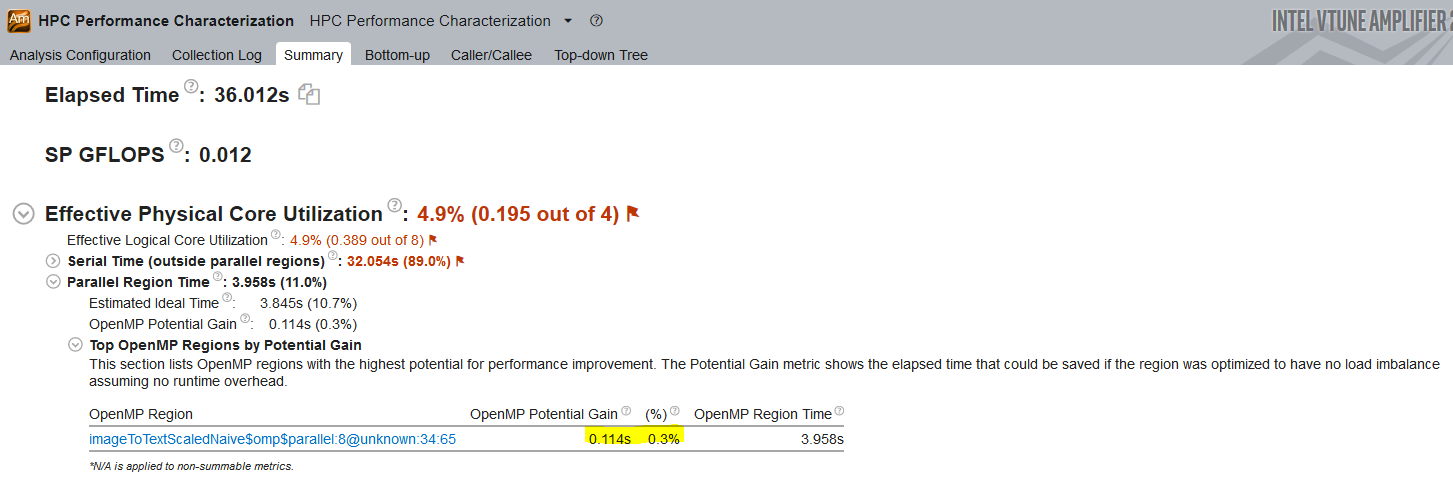

| + | Running our code again through a HPC Performance Characterization analysis yielded these results: | ||

| + | |||

| + | [[File:Ascii_art_hpc_cur.png]] | ||

| + | |||

| + | ==Intel Adviser== | ||

| + | |||

| + | '''What can the Advisor Do:''' | ||

| + | |||

| + | 1. Vectorization Optimization | ||

| + | * Use the cache-aware Roofline Analysis to identify high-impact, under-optimized loops and get tips for faster code. | ||

| + | * Quickly find what's blocking vectorization where it matters most to make the best use of your machine's Single Instruction Multiple Data (SIMD) capabilities. | ||

| + | * Identify where it is safe to force compiler vectorization. | ||

| + | * Use memory analysis to find inefficient memory usage. | ||

| + | |||

| + | 2. Thread Prototyping | ||

| + | * Use Threading Advisor to fast-track threading design. | ||

| + | * Its simple workflow lets you quickly model threading designs while delivering the data and tips you need to make faster design and optimization decisions. | ||

| + | |||

| + | (More could be found https://software.intel.com/en-us/advisor) | ||

| + | |||

| + | ---- | ||

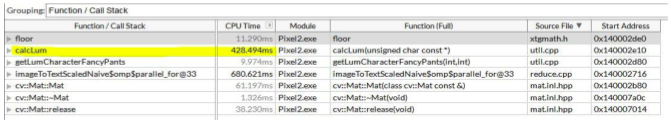

| + | Our Hotspot analysis from vTune(see previous section for details on vTune) identified that there are two bottlenecks in our code: | ||

| + | * imageToScaleeNaive - the main conversion function that creates image drawn with characters from the original buffer. | ||

| + | * calcLum - averages out the RGB values of a single pixel to convert it to grey scale. | ||

| + | |||

| + | See the details below: | ||

| + | |||

| + | [[File:Ascii vtune runtime.png]] | ||

| + | |||

| + | As you can see, '''calcLum''' takes the second highest time. However, it does not have a single loop, and really only has a single line of code. | ||

| + | |||

| + | int calcLum(const unsigned char *pixels) | ||

| + | { | ||

| + | return (0.2126 * (int)pixels[2]) + (0.7152 * (int)pixels[1]) + (0.0722 * (int)pixels[0]); | ||

| + | } | ||

| + | |||

| + | My initial thoughts were to take the code form the functions and just put it inside the loop, after all it is only called in a single place in the code and by putting it inside the main function, I would save on memory stack allocation. | ||

| + | |||

| + | [[File:ascii_variable_sizes.JPG]] | ||

| + | |||

| + | In the snippet above, I moved hard-coded variables into constants that are declared before the loop starts as well as assigned each RGB value to an int variable. The last bit is needed because we need to get ASCII codes of each character to get its intensity value. | ||

| + | |||

| + | |||

| + | In doing so, I got the following results: | ||

| + | |||

| + | [[File:ascii_data_type_missmatch_refactoring.JPG]] | ||

| + | |||

| + | The above indicates that now we have 3 datatype miss-matches that take additional time on each loop to convert from char to int and then from int to float for the final calculation of the sum. | ||

| + | |||

| + | === Solution === | ||

| + | |||

| + | Unfortunately, sometimes there is no way around the datatype conversion, as it is the only way to retrieve the information. So all we can do now is minimize the impact. | ||

| + | |||

| + | [[File:ascii_solution.JPG]] | ||

| + | |||

| + | The final solution above involves making sure that the data types stay consistent across the calculation by converting chars straight to floats and using float constants, thus only making a single type cast for every bracket pair. | ||

| + | |||

| + | The final solution achieved a speedup of around '''200ms''' on a 10s video clip. | ||

| + | |||

| + | === Summary === | ||

| + | |||

| + | Our code changes involved: | ||

| + | 1. Parallelized our code with the '''#pragma omp parallel for''' statement. | ||

| + | * This improved our runtime to 5(+- 1 second) seconds of OpenCV overehead + length of video for a 1080p video | ||

| + | 2. Added dynamic scheduling with our code | ||

| + | * Improvement on load balancing. There was minimal runtime gain on our test videos because initial parallelization already optimized the code to handle frames as fast as they are retrieved | ||

| + | 3. Eliminated the data type conversion in our logic | ||

| + | * Improvement on memory management. There was minimal runtime gain on our test videos because initial parallelization already optimized the code to handle frames as fast as they are retrieved however the section itself showed a 50% improvement in runtime | ||

| + | |||

| + | To preface our results: | ||

| − | + | We used OpenCV to process and display our results. This added roughly 5 seconds of overhead added by OpenCV to create the Window and the related processes required to display the resulting video. This is outside of our control. | |

| − | + | After our initial parallelization improvements our bottleneck is no longer our code but the speed at which we get frames from the source so our improvements do not improve our runtime with a 1080p video but could help maintain this level of execution on higher resolution videos. | |

Latest revision as of 23:09, 11 December 2018


Group : Pixels2

Team Members

Presentation

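The Google Slides presentation can be found here: https://docs.google.com/presentation/d/1Py6n332lNK9oVpiJ1-IGasvRQA5EXM52pXsKsKi4cBs/edit?usp=sharing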

ASCII Art

Introduction

The idea is to take an image and turn it into a pictorial representation using ASCII characters. We take a PNG file as input and produce a TXT file as output. The advantage of the TXT format is that the output can be pasted into an editor, where the font, text colour, and background can be changed for a customized look.

We decided to take it a step further and output a PNG file as well. This loses some of the flexibility mentioned above, but it lets us process videos, since we can take each frame and run our algorithm on it. Working with video also highlights how efficiently our algorithm runs and whether it can keep up with live processing.

OUTPUT Samples

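- Live video stream (MP4): https://drive.google.com/open?id=1XdiQNKSioRO4BhRdWrnoORsk-OKVm_Yb

- Video file processing (MKV): https://drive.google.com/open?id=1TNntn00V9z6jSreNNxXtbVBMUgru0TD5

- Reverse sampling with white colour font and black colour background (PNG): https://drive.google.com/open?id=1RyMGe1UBu03sR5hCYzS6r4Gk_GEQlDLn

- R2D2 above (TXT): https://drive.google.com/open?id=16L94F9Za4bmryrSS7cEV_oWbfp3vl4vB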

Note: for the best video quality, download the files before viewing them, since Google compresses them further for playback in the browser. For the text file, decrease the font size until no lines wrap onto the next line, and don't forget to use a monospace font.

Working with image and video files

OpenCV is an open source computer vision library that can do a lot of cool stuff. As beginners we use it to:

- Read and write image files

- Read and write video files

- Read from a video stream such as a camera

- Display video in a native OS window

OpenCV gives us a native matrix object that represents a frame in a video or live feed. We can easily extract an array of unsigned chars from it. In C++ an unsigned char holds an integer value from 0 to 255. This number represents the luminosity of a colour or greyscale channel, with 0 being completely black and 255 pure white. This is the same range used in front-end work with CSS colour functions ( background-color: rgb(0, 191, 255) ). For the tests below we read the red, green, and blue (RGB) channels and calculate luminosity ourselves, which gives us more areas to optimize. However, to keep the explanation of the algorithm in the next section simple, we will assume that OpenCV already gives us a greyscale frame, which it is able to do.

This means that for a standard HD frame of 1920 by 1080 pixels we are given an array with 2,073,600 elements ( unsigned char data[2073600]; ). If we are processing a live feed at 30 frames per second, we have to process 62,208,000 elements (30 * 2,073,600) per second. From the perspective of a single frame, we have 1/30 of a second, or about 33 milliseconds, to process each frame so as not to drop any.
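The frame-budget arithmetic above can be checked with a short C++ sketch. The constant and function names are our own illustration and are not part of the project code; one byte per pixel (a greyscale frame) is assumed.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative constants (not from the project code): a 1080p greyscale frame.
constexpr std::int64_t kFrameWidth = 1920;
constexpr std::int64_t kFrameHeight = 1080;

// Number of unsigned char elements in one greyscale frame.
constexpr std::int64_t pixelsPerFrame() { return kFrameWidth * kFrameHeight; }

// Elements that must be processed each second to keep up with a live feed.
inline std::int64_t pixelsPerSecond(std::int64_t fps) {
    assert(fps > 0);
    return pixelsPerFrame() * fps;
}

// Time available for one frame, in milliseconds.
inline double frameBudgetMs(std::int64_t fps) {
    assert(fps > 0);
    return 1000.0 / static_cast<double>(fps);
}
```

At 30 frames per second, pixelsPerSecond(30) gives 62,208,000 and frameBudgetMs(30) gives roughly 33.3 ms, which matches the figures quoted in the text.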

Algorithm and Pseudocode

The idea behind the implementation is to break the image up into chunks, shown as yellow boxes in the accompanying picture. We iterate over the chunks in a line, and then over the lines of chunks (green boxes). These form the two outer-most loops in our code.

Next we process the chunk itself. We need to average the values of all pixels within it into a single value, which requires iterating over each pixel in the chunk. Indexing is slightly more complex because the rows of a chunk are not contiguous in memory: consecutive rows of a chunk are a full frame width apart. This iteration forms the first pair of inner-most loops in our algorithm.
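A minimal sketch of the chunk-averaging step, assuming a row-major greyscale frame stored in a std::vector. The function name and parameters are hypothetical and chosen for illustration; the project's own function may be organized differently. The index expression shows why rows of a chunk are a full frame width apart.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Average the pixels of one chunk of a row-major greyscale frame.
// chunkCol / chunkRow select the chunk; chunkW / chunkH are its size in pixels.
int chunkAverage(const std::vector<unsigned char>& frame, std::size_t frameWidth,
                 std::size_t chunkCol, std::size_t chunkRow,
                 std::size_t chunkW, std::size_t chunkH) {
    assert(chunkW > 0 && chunkH > 0);
    assert((chunkCol + 1) * chunkW <= frameWidth);
    assert((chunkRow + 1) * chunkH * frameWidth <= frame.size());

    const std::size_t x0 = chunkCol * chunkW;  // left edge of the chunk
    const std::size_t y0 = chunkRow * chunkH;  // top edge of the chunk

    unsigned long sum = 0;
    for (std::size_t y = 0; y < chunkH; ++y) {
        for (std::size_t x = 0; x < chunkW; ++x) {
            // Moving down one row inside the chunk skips frameWidth elements.
            sum += frame[(y0 + y) * frameWidth + (x0 + x)];
        }
    }
    return static_cast<int>(sum / (chunkW * chunkH));
}
```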

Once we have a single value, we map its luminosity to the character that will replace the chunk. The character templates are small images in our project folder (Pound.png, AT.png, W.png, etc.). Each is only 7 by 11 pixels, and we read them all into a 2D array. The dimensions of the character template determine the size of the chunk. We experimented with templates of different sizes and found a smaller font preferable. Once we know which character to print, we copy it pixel by pixel from the template into an output array of unsigned chars of the same length as the original frame.
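The luminosity-to-character mapping can be sketched as a lookup into an ordered ramp. The ramp below is hypothetical (the text only names the Pound, AT and W templates), and its dark-to-light ordering is an assumption that suits dark text on a light background. For PNG output, the same index would select a template image instead of a char.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical ramp, ordered from the densest (darkest) glyph to the emptiest.
const std::string kRamp = "@#W*+:. ";

// Map a luminosity in [0, 255] to a character by splitting the range
// into kRamp.size() equal bands.
char glyphFor(int luminosity) {
    assert(luminosity >= 0 && luminosity <= 255);
    const std::size_t index =
        static_cast<std::size_t>(luminosity) * kRamp.size() / 256;
    return kRamp[index];
}
```

Dividing by 256 rather than 255 keeps the index strictly below the ramp size, so a luminosity of 255 still maps to the last glyph rather than one past the end.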

Iterating over the character template forms the second pair of inner-most loops. The pseudocode for this is shown below. Although it may look like we have four nested loops, the runtime complexity of our algorithm is O(N), where N is the number of pixels, since every pixel in the image is visited a fixed number of times regardless of how the loops are nested. Because the work breaks down into chunks, each chunk can be processed independently of the others. This makes it an embarrassingly parallel problem, although I prefer the newer proposed term, a perfectly parallel problem. (Source: https://en.wikipedia.org/wiki/Embarrassingly_parallel)

vTune Amplifier with OpenMP

vTune Amplifier Overview:

We used vTune Amplifier to profile our code and check its initial performance.

vTune Amplifier is a code profiler that can identify:

- Where time is being spent during the code's execution

- The amount of concurrency in the code

- Bottlenecks created by synchronization primitives

vTune Amplifier offers several analysis types; we analyzed the ASCII art program with the Hotspot Analysis and HPC Performance Characterization.

All of the following analyses were run on a 30-second video at 1080p.

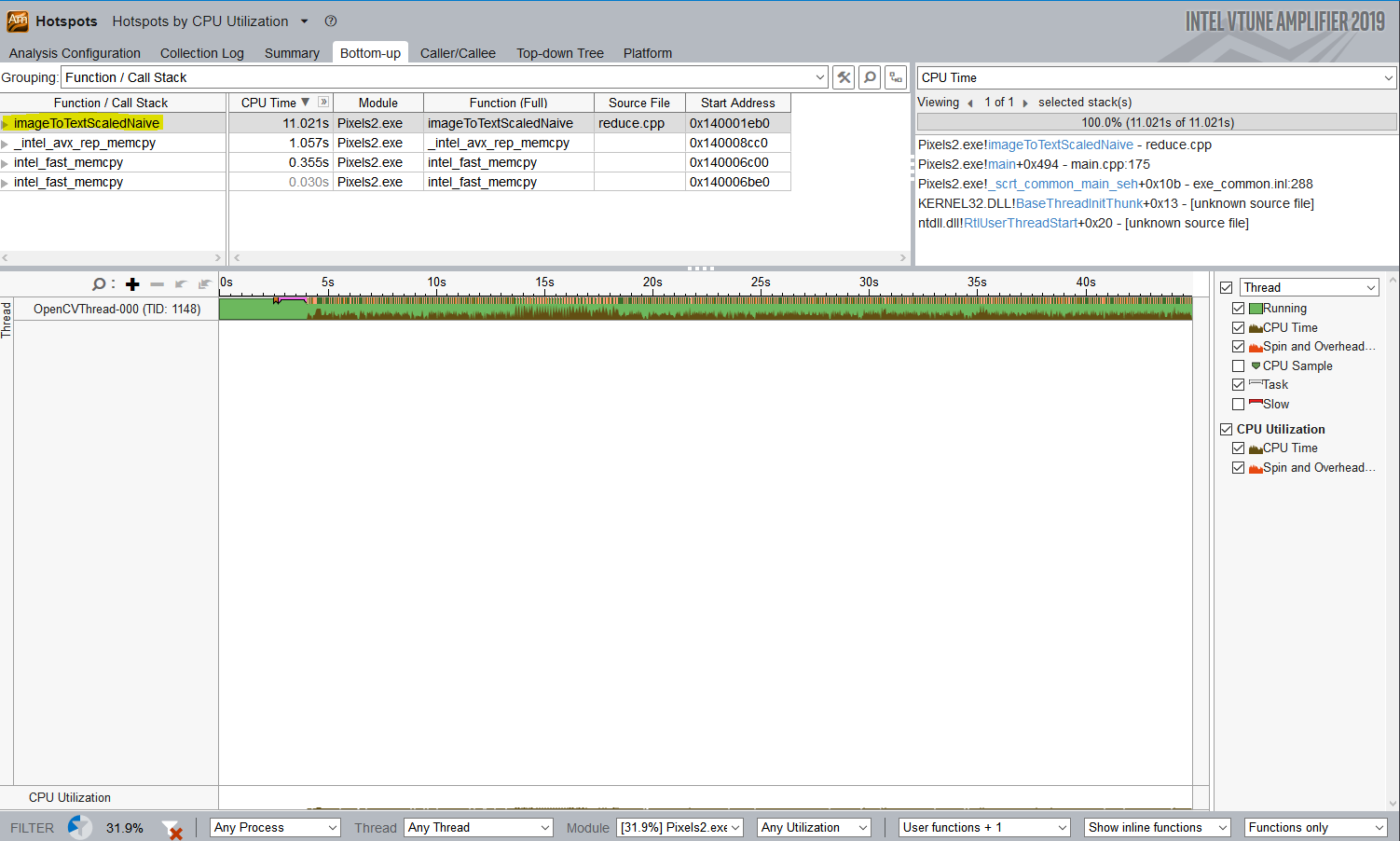

The initial hotspot analysis of our serial code took 44.904 seconds to complete, with the imageToTextScaledNative function as the top hotspot.

Looking at the thread visualization pane, we can see that only one thread is used by the serial implementation of the function.

To fix this we added the statement:

    #pragma omp parallel for

to our code. Referring back to our pseudocode, the new code looked something like this:

    #pragma omp parallel for
    for( j ) {
        for( k ) {
            int sum=0
            for(y) {
                for(x) {
                    int index // using j, k x, y
                    sum += input[index];
                }
            }
            int ave // get average using sum
            for(y) {
                for(x) {
                    int index // using j, k x, y
                    int charIndex // using x, y
                    output[index] = ascii[charIndex]
                }
            }
        }
    }
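The pseudocode above can be fleshed out into a compilable sketch. This is not the project's imageToTextScaledNative function: the name asciiFrame, the Glyph type, and the rule used to pick a glyph from the average are assumptions made for illustration. It also assumes the frame dimensions are exact multiples of the chunk size.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical glyph type: a chunkW x chunkH greyscale bitmap, row-major.
using Glyph = std::vector<unsigned char>;

void asciiFrame(const std::vector<unsigned char>& input,
                std::vector<unsigned char>& output,
                int width, int height, int chunkW, int chunkH,
                const std::vector<Glyph>& glyphs) {
    assert(chunkW > 0 && chunkH > 0 && !glyphs.empty());
    assert(width % chunkW == 0 && height % chunkH == 0);
    assert(input.size() ==
           static_cast<std::size_t>(width) * static_cast<std::size_t>(height));
    for (const Glyph& g : glyphs) {
        assert(g.size() == static_cast<std::size_t>(chunkW * chunkH));
        (void)g;  // silences unused-variable warnings when NDEBUG is defined
    }

    output.resize(input.size());
    const int chunkRows = height / chunkH;
    const int chunkCols = width / chunkW;
    const int numGlyphs = static_cast<int>(glyphs.size());

    // Each value of j writes its own band of output rows, so the
    // iterations are independent and need no locking.
    #pragma omp parallel for
    for (int j = 0; j < chunkRows; ++j) {
        for (int k = 0; k < chunkCols; ++k) {
            // First inner pair: average the chunk.
            int sum = 0;
            for (int y = 0; y < chunkH; ++y) {
                for (int x = 0; x < chunkW; ++x) {
                    const std::size_t index = static_cast<std::size_t>(
                        (j * chunkH + y) * width + (k * chunkW + x));
                    sum += input[index];
                }
            }
            const int ave = sum / (chunkW * chunkH);
            const Glyph& glyph =
                glyphs[static_cast<std::size_t>(ave * numGlyphs / 256)];

            // Second inner pair: copy the chosen glyph into the output.
            for (int y = 0; y < chunkH; ++y) {
                for (int x = 0; x < chunkW; ++x) {
                    const std::size_t index = static_cast<std::size_t>(
                        (j * chunkH + y) * width + (k * chunkW + x));
                    output[index] =
                        glyph[static_cast<std::size_t>(y * chunkW + x)];
                }
            }
        }
    }
}
```

When compiled without OpenMP support the pragma is ignored and the loop simply runs serially, which makes it easy to compare the two versions with the same source.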

This reduced our overall runtime to 36.095 seconds, a speedup of about 1.24x over the serial run.

Note that our overall CPU time has increased. This is a good sign: CPU time is summed across all threads, so the increase means we are utilizing more of our CPU.

Looking at our thread usage, we see that we are now using 8 threads instead of 1, and that omp$parallel is attached to our function.

Note the pink section on the master thread. It represents OpenCV initializing the window in which the resulting video is displayed. This is outside the scope of our code, so we treat it as overhead we cannot control.

The orange blocks show the parallel regions where we process and convert each frame, and the gaps between them are where OpenCV grabs each individual frame from the source.

Then we ran our code through the HPC Performance Characterization analysis to verify the efficiency of our OpenMP implementation.

This analysis showed that we could potentially gain 1.311 seconds of runtime by optimizing our use of OpenMP. The cause is an imbalance in the work distribution among our threads: one or more threads complete their share before the others, then sit idle at the barrier waiting for the rest to finish, which leaves processing power unused.

To fix this we added dynamic scheduling, so that the workload is allocated at run time: when a thread finishes its work and more remains, it picks up the next piece.

So our pragma statement was changed to:

    #pragma omp parallel for schedule(dynamic)
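To illustrate what the clause changes, here is a small sketch with a deliberately uneven workload. The functions unevenWork and runDynamic are hypothetical and unrelated to the project code. With no chunk size given, schedule(dynamic) hands out one iteration at a time, so a thread that finishes a cheap iteration immediately takes the next one instead of waiting at the barrier.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical uneven workload: iteration i costs roughly i units of work.
long unevenWork(int i) {
    long acc = 0;
    for (int n = 0; n <= i; ++n) {
        acc += n;
    }
    return acc;
}

// Run all iterations, letting idle threads pull the next iteration on demand.
std::vector<long> runDynamic(int iterations) {
    assert(iterations >= 0);
    std::vector<long> results(static_cast<std::size_t>(iterations));

    #pragma omp parallel for schedule(dynamic)
    for (int i = 0; i < iterations; ++i) {
        // Each iteration writes only its own slot, so no synchronization is needed.
        results[static_cast<std::size_t>(i)] = unevenWork(i);
    }
    return results;
}
```

The results are identical to a static schedule; only the assignment of iterations to threads differs, at the cost of a little extra scheduling overhead per iteration.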

Running our code again through an HPC Performance Characterization analysis yielded these results:

Intel Advisor

What Advisor can do:

1. Vectorization Optimization

- Use the cache-aware Roofline Analysis to identify high-impact, under-optimized loops and get tips for faster code.

- Quickly find what's blocking vectorization where it matters most to make the best use of your machine's Single Instruction Multiple Data (SIMD) capabilities.

- Identify where it is safe to force compiler vectorization.

- Use memory analysis to find inefficient memory usage.

2. Thread Prototyping

- Use Threading Advisor to fast-track threading design.

- Its simple workflow lets you quickly model threading designs while delivering the data and tips you need to make faster design and optimization decisions.

(More can be found at https://software.intel.com/en-us/advisor)

Our Hotspot analysis from vTune (see the previous section for details on vTune) identified two bottlenecks in our code:

- imageToScaleeNaive - the main conversion function, which creates an image drawn with characters from the original buffer.

- calcLum - computes a weighted average of the RGB values of a single pixel to convert it to greyscale.

See the details below:

As you can see, calcLum takes the second-highest time. However, it contains no loops and really has only a single line of code.

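    // Weighted sum of the three channels. OpenCV's default channel order is BGR,
    // so pixels[2] is red, pixels[1] is green, and pixels[0] is blue.
    int calcLum(const unsigned char *pixels)
    {
        return (0.2126 * (int)pixels[2]) + (0.7152 * (int)pixels[1]) + (0.0722 * (int)pixels[0]);
    }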

My initial thought was to take the code from the function and put it directly inside the loop. After all, it is called in only one place in the code, and by putting it inside the main function I would save on stack allocation for the call.

In the snippet above, I moved the hard-coded values into constants that are declared before the loop starts, and assigned each RGB value to an int variable. The latter is needed because each channel is stored as a char, and we need its numeric (ASCII) code to get the intensity value.

In doing so, I got the following results:

The above indicates that we now have 3 data type mismatches, which take additional time on every iteration to convert from char to int and then from int to float for the final calculation of the sum.

Solution

Unfortunately, sometimes there is no way around the datatype conversion, as it is the only way to retrieve the information. So all we can do now is minimize the impact.

The final solution above keeps the data types consistent across the calculation by converting the chars straight to floats and using float constants, so that only a single type cast is made for each bracketed term.
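Since the original snippet is only available as a screenshot, the following is a sketch of what such a float-only version might look like rather than the exact code we used. The names kRedWeight, kGreenWeight, kBlueWeight and calcLumFloat are hypothetical; the weights are the same as in calcLum, written as float literals.

```cpp
#include <cassert>

// Float constants, declared once so the loop body does no double arithmetic.
constexpr float kRedWeight = 0.2126f;
constexpr float kGreenWeight = 0.7152f;
constexpr float kBlueWeight = 0.0722f;

// Each channel is cast once, straight from unsigned char to float,
// and the sum is truncated to int at the very end.
inline int calcLumFloat(const unsigned char* pixels) {
    assert(pixels != nullptr);
    return static_cast<int>(kRedWeight * static_cast<float>(pixels[2]) +
                            kGreenWeight * static_cast<float>(pixels[1]) +
                            kBlueWeight * static_cast<float>(pixels[0]));
}
```

Because the result is truncated rather than rounded, values can differ by one from a rounded calculation; that is the same behaviour as the implicit conversion in the original calcLum.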

The final solution saved around 200 ms on a 10 s video clip.

Summary

Our code changes involved:

1. Parallelized our code with the #pragma omp parallel for statement.

- This improved our runtime to the length of the video plus 5 (± 1) seconds of OpenCV overhead for a 1080p video.

2. Added dynamic scheduling to our code.

- Improved load balancing. There was minimal runtime gain on our test videos because the initial parallelization already let the code handle frames as fast as they are retrieved.

3. Reduced the data type conversions in our logic.

- Improved memory management. There was minimal runtime gain on our test videos because the initial parallelization already let the code handle frames as fast as they are retrieved; however, the section itself showed a 50% improvement in runtime.

Some context for these results:

We used OpenCV to process and display our results. OpenCV adds roughly 5 seconds of overhead to create the window and run the related processes required to display the resulting video. This is outside of our control.

After our initial parallelization, the bottleneck is no longer our code but the speed at which we get frames from the source. Our later improvements therefore do not reduce the runtime on a 1080p video, but they could help maintain this level of performance on higher-resolution videos.